According to a new report published by the Tech Transparency Project (TTP), Facebook's algorithm has automatically created more than 100 pages on the social network for US-designated terrorist groups such as Al-Qaeda and Islamic State.

TTP is a research initiative of the non-profit watchdog organisation Campaign for Accountability, which, according to the TTP website, "uses research, litigation and aggressive communications to expose misconduct and malfeasance in public life". TTP began as the Google Transparency Project in 2016 and has since expanded to cover several major tech companies. The Capital Research Centre, a non-profit watchdog formed during the Reagan administration, has called TTP a left-leaning platform, though the group does not claim any political affiliation.

According to TTP's report, Facebook created 108 pages for Islamic State, along with many other pages belonging to other terrorist groups, including Al-Qaeda. The report further claimed that the platform automatically created the terrorist group pages when users "checked in" to terrorist organisations or listed the groups in their profiles.

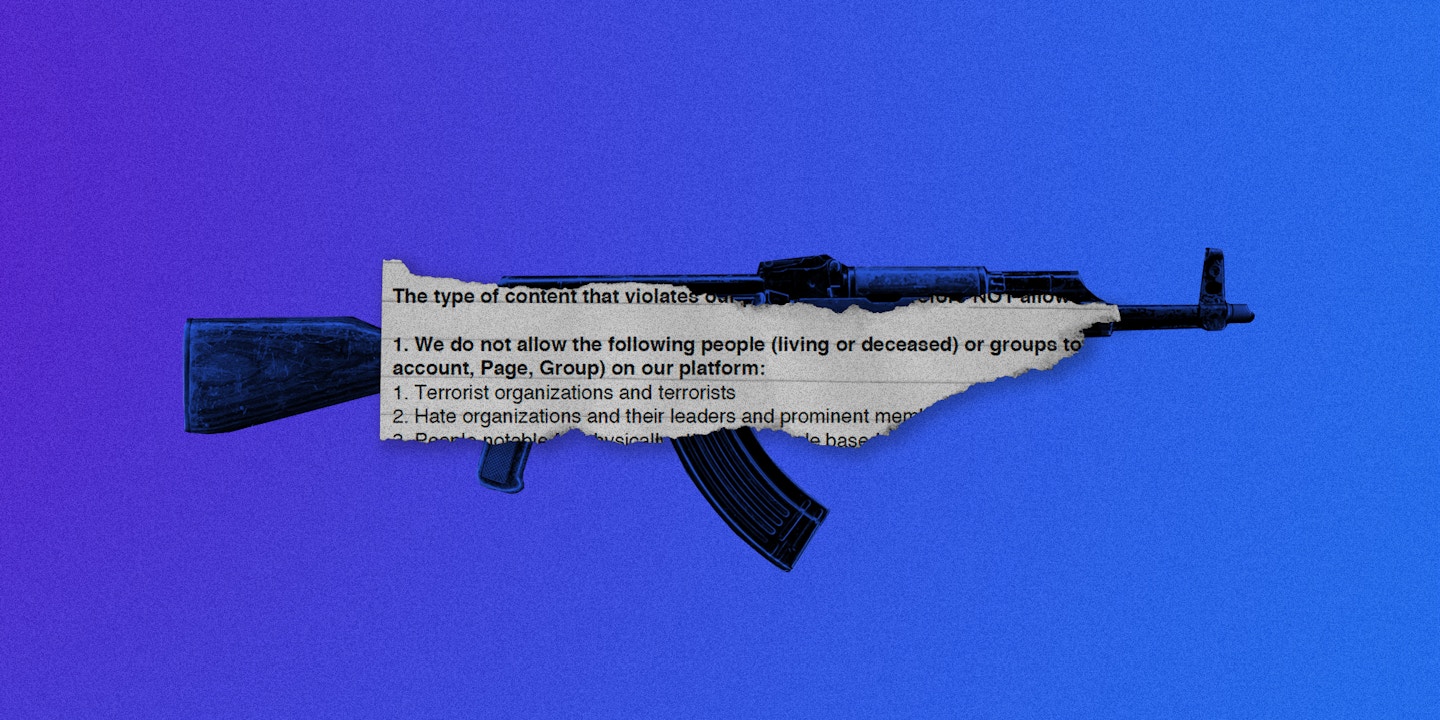

In its report, the Tech Transparency Project further claimed that Facebook generated these pages despite its policy banning Al-Qaeda and Islamic State, and despite its algorithm being trained to detect them.

Legal Responsibility of Facebook

Facebook's explosive growth into the world's largest social networking website has taken place, in substantial part, because it persuaded regulators that it is merely a platform for content produced by others, not a producer of its own content. This assertion has been central to its ability not only to avoid regulation but also to maintain immunity from liability in civil litigation.

Now that a whistle-blower has revealed that terror and hate content is being generated by Facebook itself and not by "another information content provider," Facebook's ability to claim Section 230 immunity in cases filed by families of terror victims has been called into question.

Why the Securities and Exchange Commission?

Facebook is well aware that its website is being used by terror and hate groups to accomplish their destructive goals. Apparently, it has decided that it is no longer reasonable for it to claim that it cannot be held responsible for terror and hate content.

Shareholders depend on accurate information to guide their decisions on whether to invest in a company. As owners, they are in a unique position to compel management to adopt corporate social responsibility practices that are essential to maintaining the company's social license and long-term profitability.

Five Key Findings in the Petition

The whistle-blower analysed 3,228 Facebook profiles of individuals expressing affiliation with terror or hate groups. The analysis produced the following key findings:

1. TERROR AND HATE SPEECH AND IMAGES ARE RAMPANT ON FACEBOOK

The whistle-blower found that 317 of the 3,228 profiles surveyed contained the flag or symbol of a terrorist group in their profile images, cover photos, or featured photos on their publicly accessible profiles. The study also details hundreds of other individuals who had visibly and openly shared images, posts, and propaganda of ISIS, Al-Qaeda, the Taliban and other known terror groups, including media that appeared to show their own militant activity.

2. CONTRARY TO ITS PLEDGES, FACEBOOK HAS NO MEANINGFUL STRATEGY FOR REMOVING THIS TERROR AND HATE CONTENT FROM ITS WEBSITE

Facebook has purportedly accepted responsibility for terror and hate content on its platform. During an April 2018 appearance before a congressional committee, CEO Mark Zuckerberg stated: “When people ask if we’re a media company what I heard is, ‘Do we have a responsibility for the content that people share on Facebook,’ and I believe the answer to that question is yes.” Facebook has consistently stated that it blocks 99% of the activity of targeted terrorist groups such as ISIS and Al-Qaeda without the need for user reporting.

3. FACEBOOK IS CREATING ITS OWN TERROR AND HATE CONTENT, WHICH IS BEING LIKED BY INDIVIDUALS AFFILIATED WITH TERRORIST ORGANISATIONS

Facebook’s problem with terror and hate content goes beyond its misleading statements about its removal of content that violates community standards. Facebook has also never addressed the fact that it actively promotes terror and hate content across the website via its auto-generated features.

In multiple documented cases, Facebook enabled networking and recruiting by repurposing user-generated content and auto-generating pages, logos, promotional videos, and other marketing.

For example, Facebook auto-generated “Local Business” pages for terrorist groups using the job descriptions that users placed in their profiles. Facebook also auto-filled terror icons, branding, and flags that appear when a user searches for members of that group on the platform.

4. FACEBOOK IS PROVIDING A POWERFUL NETWORKING AND RECRUITMENT TOOL TO TERRORIST AND HATE GROUPS

The whistle-blower found that individuals who choose to become Friends of terrorist groups, including ISIS and Al-Qaeda, share terror-related content frequently and openly on Facebook. For example, the whistle-blower’s searches in Arabic for the names of other terror groups such as Boko Haram, Al Shabaab, and Hay’at Tahrir Al-Sham immediately surfaced Facebook pages, jobs, and profiles expressing affiliation with and support for those extremist groups. All of this appears to be part of an ongoing attempt by terrorist groups to network and recruit new members.

5. FACEBOOK HAS CLAIMED IT IS NOT A CONTENT PROVIDER, FAILING TO DISCLOSE THAT IT IS CREATING TERROR AND HATE CONTENT

Facebook has put its shareholders at risk by failing to fully disclose its liability risk to them. Historically, Facebook has benefited from Section 230 of the Communications Decency Act (CDA), which provides immunity from tort liability to certain internet companies that serve as hosts of content created by others. To the extent that Facebook is auto-generating its own terror content and this content, in turn, enables networking and injurious acts by terrorists or white supremacists, Facebook may no longer be protected from tort liability under the CDA.

BY SANJANA PANDEY